728x90

1. Drain3

- 자연어로 이루어진 데이터들의 Template을 자동적으로 만들어주는 라이브러리

# 라이브러리 설치

pip install drain32. CODE

GitHub - logpai/Drain3: A robust streaming log template miner based on the Drain algorithm

A robust streaming log template miner based on the Drain algorithm - logpai/Drain3

github.com

- examples 폴더의 drain3.ini와 drain_bigfile_demo.py, drain_stdin_demo.py만 봐도 기본적인 drain3가 사용가능

- 위를 바탕으로 아래에 코드 재 정리

1) LogTemplate을 구하여 저장

import json

import logging

import os, sys, time

from os.path import dirname

from drain3 import TemplateMiner

from drain3.template_miner_config import TemplateMinerConfig

from drain3.file_persistence import

filename = "./logdata.txt"

save_bin = "./save_bin.bin" #bin파일로 저장

save_json = "./save_json.json" #bin파일은 압축하여 저장하기 때문에 시각적으로 보고싶다면 사용

# logdata 불러오기

with open(filename) as f:

log_lines =f.readlines()

logger=logging.getLogger(__name__)

logging.basicConfig(stream=sys.stdout, level=logging.INFO, format='%(message)s')

persistence=FilePersistence(save_bin)

# Initialize

config=TemplateMinerConfig()

template_miner=TemplateMiner(persistence,config=config)

line_count=0

start_time=time.time()

batch_start_time=start_time

batch_size=10000

for line in log_lines:

line=line.rstrip()

result=template_miner.add_log_message(line)

line_count+=1

if result["change_type"]!="none":

result_json=json.dumps(result)

template=result["template_mined"]

time_took=time.time()-start_time

rate=line_count/time_took

logger.info(f"--- Done processing file in {time_took:.2f} sec. Total of {line_count} lines, rate {rate:.1f} lines/sec, "

f"{len(template_miner.drain.clusters)} clusters")

#sorted_clusters = sorted(template_miner.drain.clusters, key=lambda it: it.size, reverse=True)

#Log Template 저장 - 시각적으로 보기 위한 용도

with open(save_json, "w") as f:

template_miner.drain.print_tree(f)2) 저장한 LogTemplate 불러오기

import json

import logging

import os, sys, time

from os.path import dirname

from drain3 import TemplateMiner

from drain3.template_miner_config import TemplateMinerConfig

from drain3.file_persistence import FilePersistence

filename = "./logdata2.txt"

load_bin = "./save_bin.bin"

save_txt = "./save_txt.txt"

# logdata 불러오기

with open(filename) as f:

log_lines =f.readlines()

# 저장한 bin파일 불러오기

persistence=FilePersistence(load_bin)

#Initialize

config=TemplateMinerConfig()

template_miner=TemplateMiner(persistence, config=config)

# Template Matching

for line in log_lines:

cluster=template_miner.match(line)

template=cluster.get_template()

with open(save_txt, "a+") as f:

f.write('{},{}'.format(cluster.cluster_id, template)) # Template ID와 Template 저장

f.write(','.join(template_miner.get_parameter_list(template,line))) # Masking 된 원소 추출

f.write('\n')3. Initialize

1) Default Initialize

drain_depth =4

drain_extra_delimiters =[]

drain_max_children =100

drain_max_clusters =None

drain_sim_th =0.4

mask_prefix ='<'

mask_suffix ='>'

masking_instructions =[]

parameter_extraction_cache_capacity =3000

parametrize_numeric_tokens =True

profiling_enabled =False

profiling_report_sec =60

snapshot_compress_state =True

snapshot_interval_minutes =5- drain depth : max depth levels of log clusters. Minimum is 3.

- extra_delimiters : delimiters to apply when splitting log message into words (in addition to whitespace).

Format is a Python list e.g. ['_', ':']. - max_children : max number of children of an internal node

- max_clusters : max number of tracked clusters

- sim_th : similarity threshold

- mask_prefix, mask_suffix : the wrapping of identified parameters in templates.

- masking_instructions : arameters masking

- parameter_extraction_cache_capacity :

- parameterize_numeric_tokens L

- profiling_enabled : Added time prifiling (True/False)

- profiling_report_sec : profiling report second

- snapshot_compress_state : whether to compress the state before saving it.

- snapshot_interval_minutes : time interval for new snapshot

2) 사용자가 직접 만들 수 있음

[SNAPSHOT]

snapshot_interval_minutes = 10

compress_state = True

[MASKING]

masking = [

{"regex_pattern":"((?<=[^A-Za-z0-9])|^)(([0-9a-f]{2,}:){3,}([0-9a-f]{2,}))((?=[^A-Za-z0-9])|$)", "mask_with": "ID"},

{"regex_pattern":"((?<=[^A-Za-z0-9])|^)(\\d{1,3}\\.\\d{1,3}\\.\\d{1,3}\\.\\d{1,3})((?=[^A-Za-z0-9])|$)", "mask_with": "IP"},

{"regex_pattern":"((?<=[^A-Za-z0-9])|^)([0-9a-f]{6,} ?){3,}((?=[^A-Za-z0-9])|$)", "mask_with": "SEQ"},

{"regex_pattern":"((?<=[^A-Za-z0-9])|^)([0-9A-F]{4} ?){4,}((?=[^A-Za-z0-9])|$)", "mask_with": "SEQ"},

{"regex_pattern":"((?<=[^A-Za-z0-9])|^)(0x[a-f0-9A-F]+)((?=[^A-Za-z0-9])|$)", "mask_with": "HEX"},

{"regex_pattern":"((?<=[^A-Za-z0-9])|^)([\\-\\+]?\\d+)((?=[^A-Za-z0-9])|$)", "mask_with": "NUM"},

{"regex_pattern":"(?<=executed cmd )(\".+?\")", "mask_with": "CMD"}

]

mask_prefix = <:

mask_suffix = :>

[DRAIN]

# engine is Optional parameter. Engine will be "Drain" if the engine argument is not specified.

# engine has two options: 'Drain' and 'JaccardDrain'.

# engine = Drain

sim_th = 0.4

depth = 4

max_children = 100

max_clusters = 1024

extra_delimiters = ["_"]

[PROFILING]

enabled = True

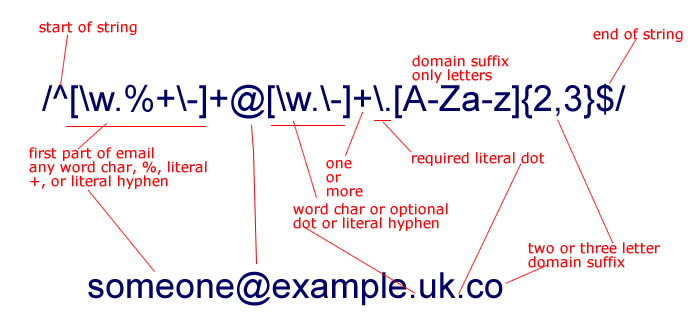

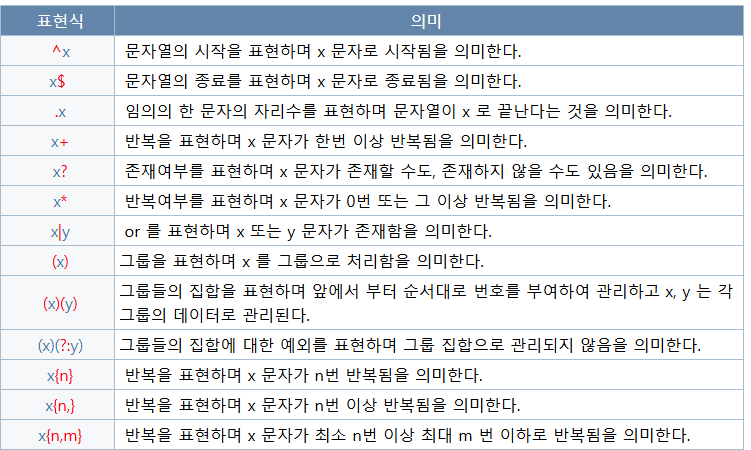

report_sec = 30- masking은 정규표현식 구조로 사용하면 됨

정규표현식 (Regex) 정리

정규표현식은 아주 가끔 쓰기때문에 항상 다시 볼때마다 헷갈리곤 하기에 주요 사용예를 내가 나중에 다시 봤을 때 편하도록 정리하여 보았다. 정규 표현식의 용어들정규 표현식에서 사용되는

hamait.tistory.com

3. 저장한 Initialize 사용하기

config=TemplateMinerConfig()

config.load("./drain3.ini")4. Drain3 결과

Template 저장시 json파일을 열어보면 아래와 같이 저장된다.

<root>

<L=24>

"<TEST>" (cluster_count=1)

ID=1 : size=4610 : <TEST> <:DATE:> <:TIME:> <:*:> <:*:> <:*:> <:*:> System <:HEX:> MAIN <:*:>

"<ERROR>" (cluster_count=1)

ID=4 : size=23381 : <ERROR> <:DATE:> <:TIME:> <:*:> <:*:>

"<WARNING>" (cluster_count=1)

ID=73 : size=193 : <WARNING> <:DATE:> <:TIME:> <:*:>- ID: Cluster ID로 Template ID로 보면 됨

- Size: 이 Template에 해당하는 데이터 갯수

실제 예시 데이터로 테스트 해보면

<TEST> 2020-01-01 00:00:00 It is a test! System 1234 MAIN 1234아래와 같은 결과를 얻는다.

1,<TEST> <:DATE:> <:TIME:> <:*:> <:*:> <:*:> <:*:> System <:HEX:> MAIN <:*:>

2020-01-01,00:00:00,It,is,a,test!,1234,1234

728x90

'DeepLearning > NLP' 카테고리의 다른 글

| [Word2Vec] CBOW - Python 코드 (0) | 2025.02.25 |

|---|---|

| [NLP] 텍스트 데이터 전처리 - Log Template (1) | 2025.01.13 |

| [NLP] Transformer - BERT (1) | 2025.01.11 |

| [NLP] Encoder-Decoder와 Attention (2) | 2025.01.06 |

| [NLP] 텍스트 데이터 전처리 (2) | 2025.01.05 |